I am currently a Research Engineer at (Meta) Reality Labs Research. Prior to that, I obtained my Ph.D. degree from the Multimedia Laboratory, The Chinese University of Hong Kong, in September 2021, advised by Prof. Xiaoou Tang and Prof. Chen Change Loy. I received my B.E. from the Department of Computer Science and Technology, Tsinghua University, in 2016. I interned at Facebook AI Research (FAIR) with Dr. Hanbyul Joo and Dr. Takaaki Shiratori in Spring 2020. Earlier, I worked as a computer vision researcher at SenseTime from Apr. 2015 to Aug. 2017. My research interests include computer vision, computer graphics, and machine learning. I am especially interested in virtual humans, including 3D face, hand, and body reconstruction.

Email / GitHub / Google Scholar / LinkedIn / CV

Mar. 2022 - Present, (Meta) Reality Labs Research, Research Engineer. Pittsburgh, PA, U.S. I am currently working at (Meta) Reality Labs Research on building 3D face avatars for AR/VR devices.

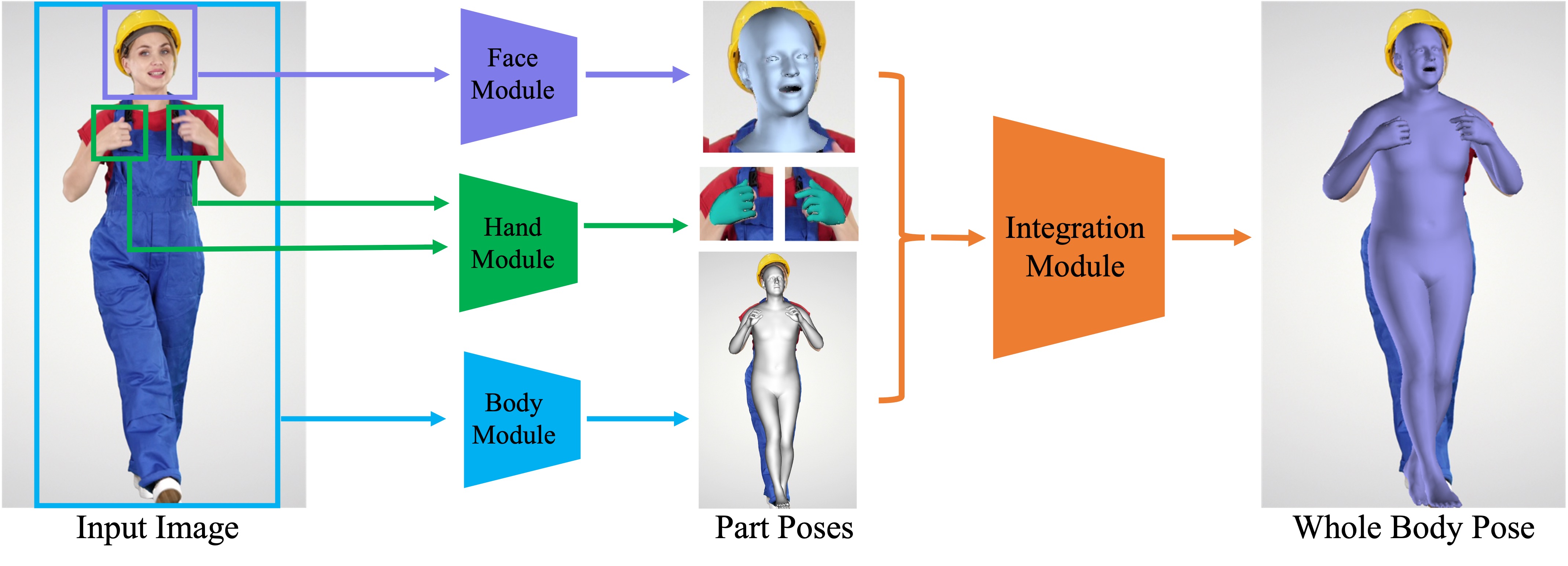

Jan. 2020 - May 2020, Facebook AI Research (FAIR), Research Intern. Menlo Park, CA, U.S. We use SMPL-X to represent 3D hands and bodies, and first adopt separate modules to predict hand and body motion independently. The hand and body predictions are then combined and fine-tuned to obtain unified hand and body motion results. Our model runs 10x faster than previous methods, with better performance in challenging in-the-wild scenarios with motion blur.

Apr. 2015 - Jul. 2017, SenseTime Research, Computer Vision Researcher. Beijing, China. During my time at SenseTime, I mainly took part in research projects on OCR and face recognition. These projects included, but were not limited to: ID card recognition (given an image of an ID card, recognize important information such as name and address); text detection for in-the-wild scenes (using a modified RPN); and a remote training system that trains and evaluates automatically to produce a face verification model.

Jul. 2017 - Sep. 2021, The Chinese University of Hong Kong

Aug. 2012 - Jul. 2016, Tsinghua University

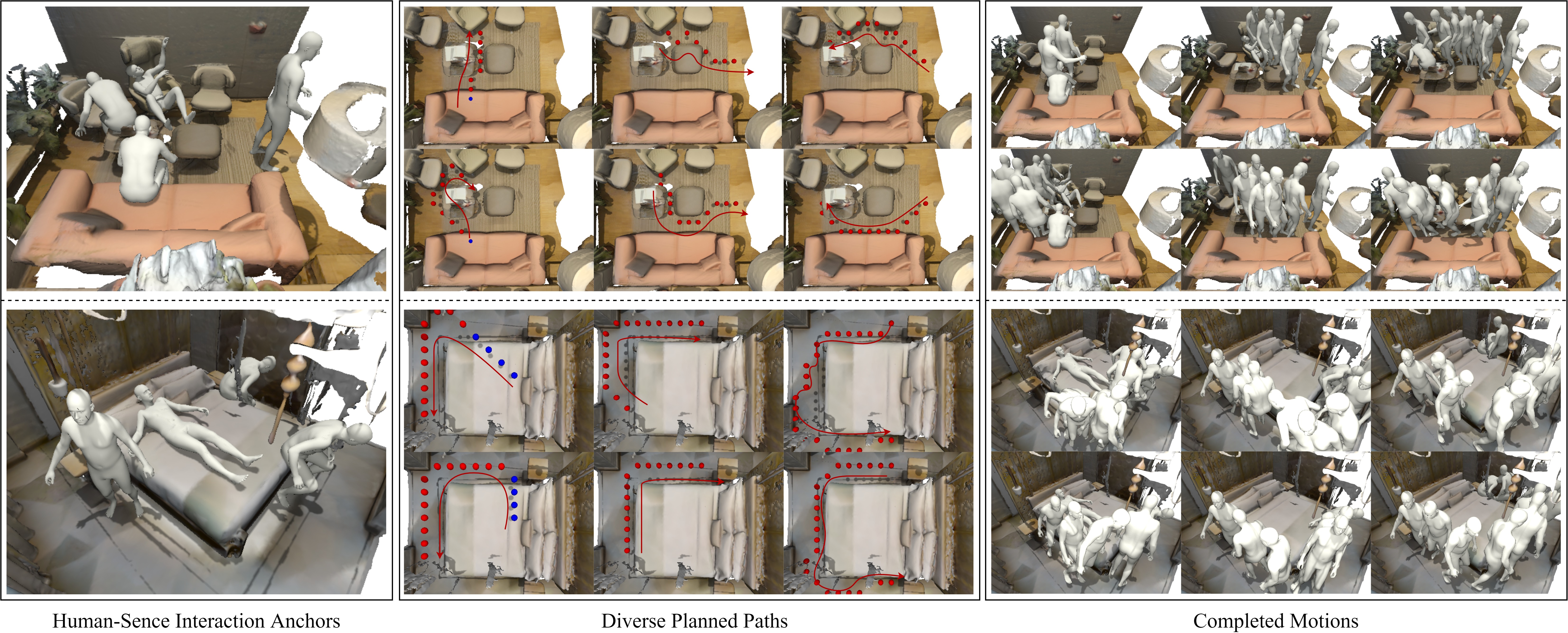

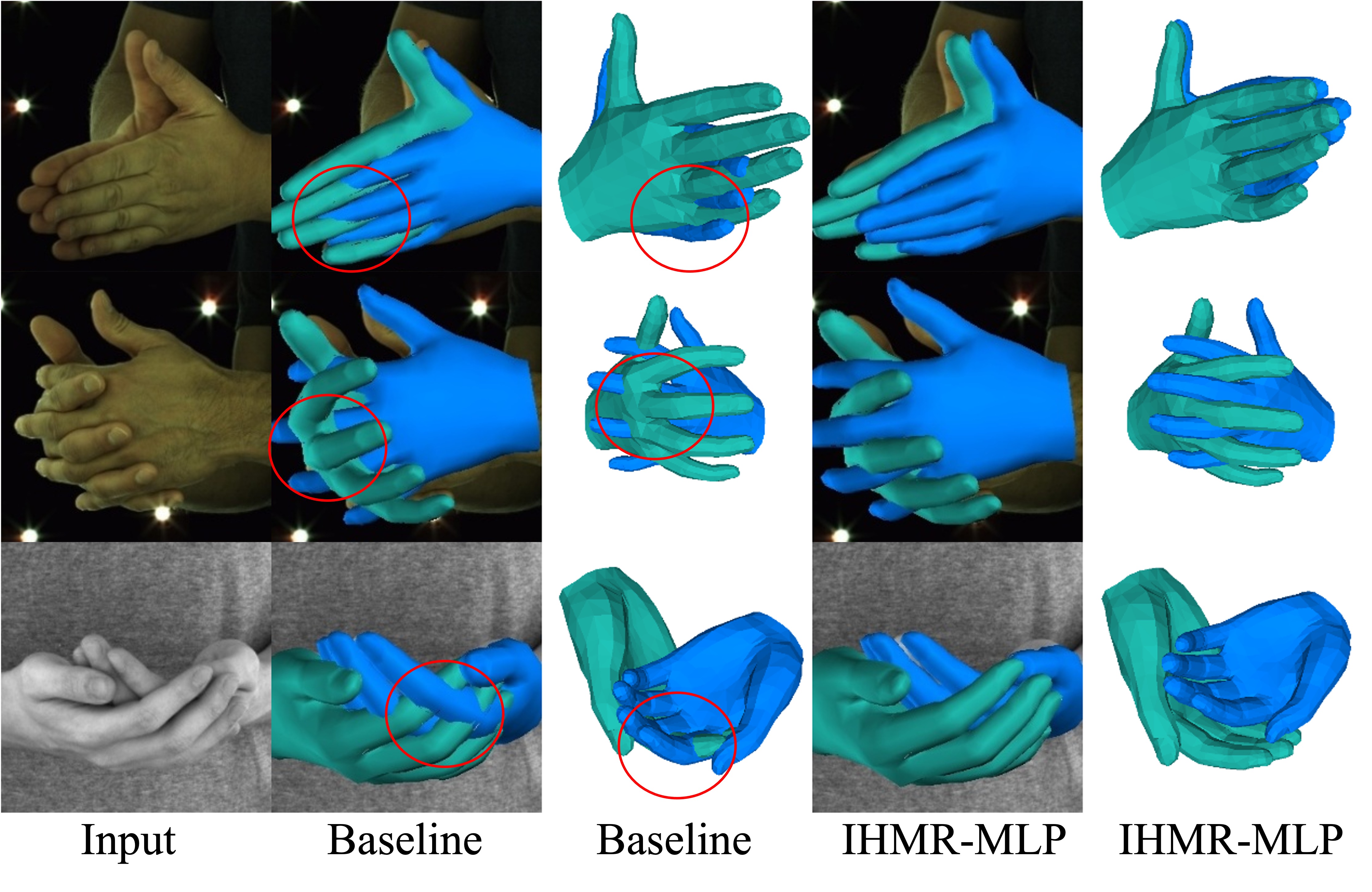

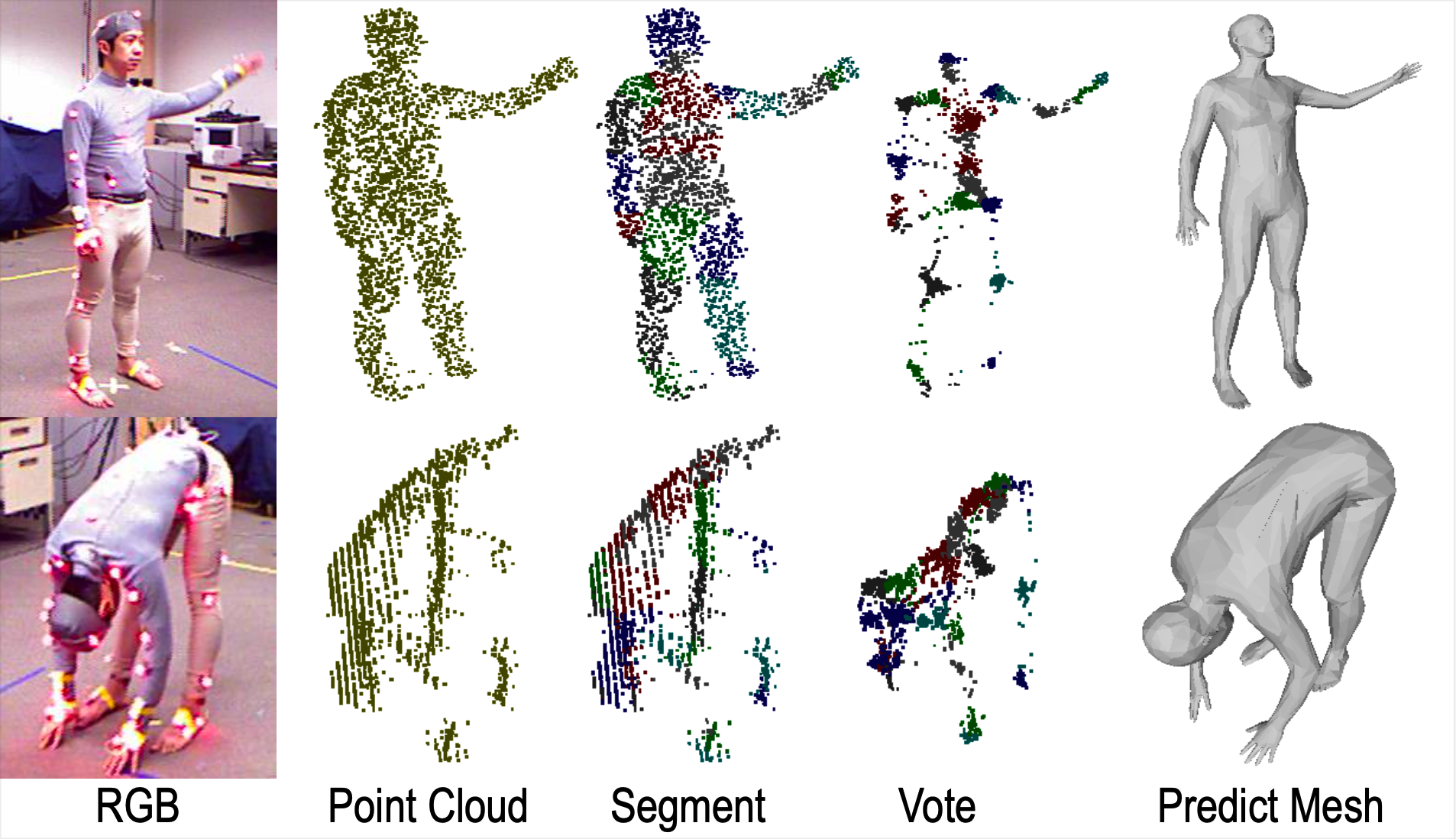

We design a novel framework that improves 3D human mocap accuracy on challenging poses.

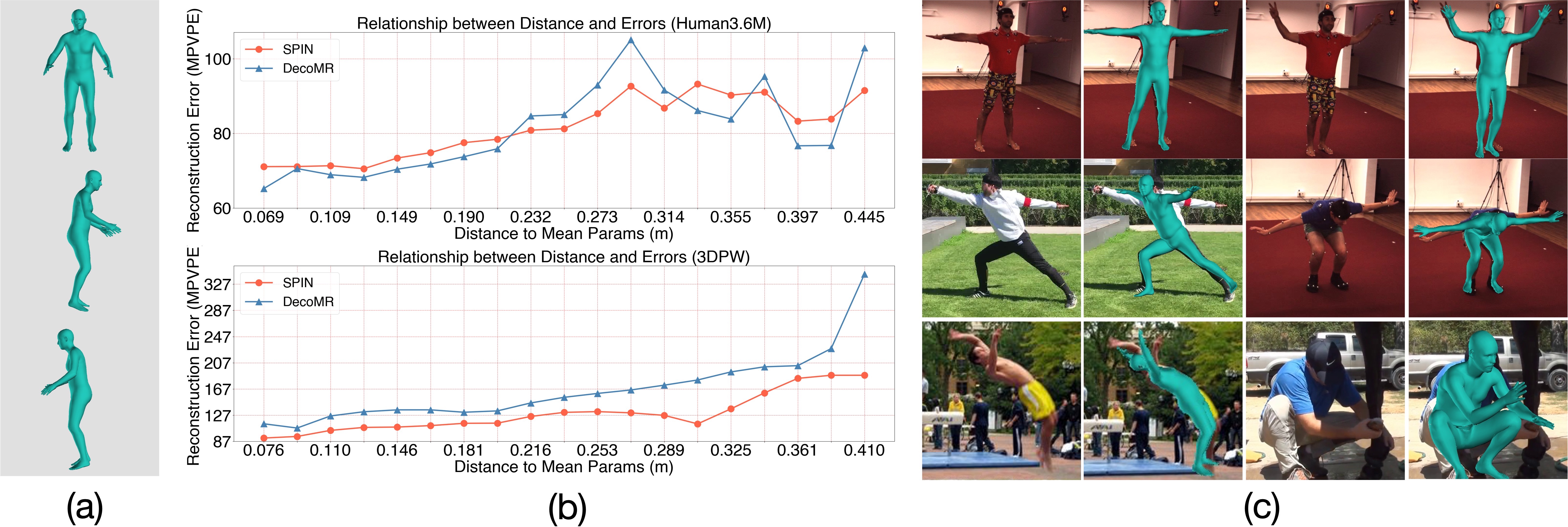

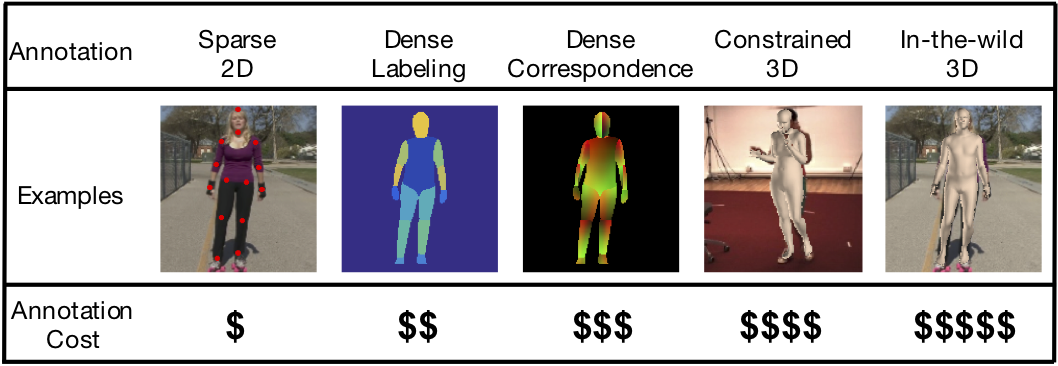

We provide a thorough investigation of annotation design for in-the-wild 3D human reconstruction.

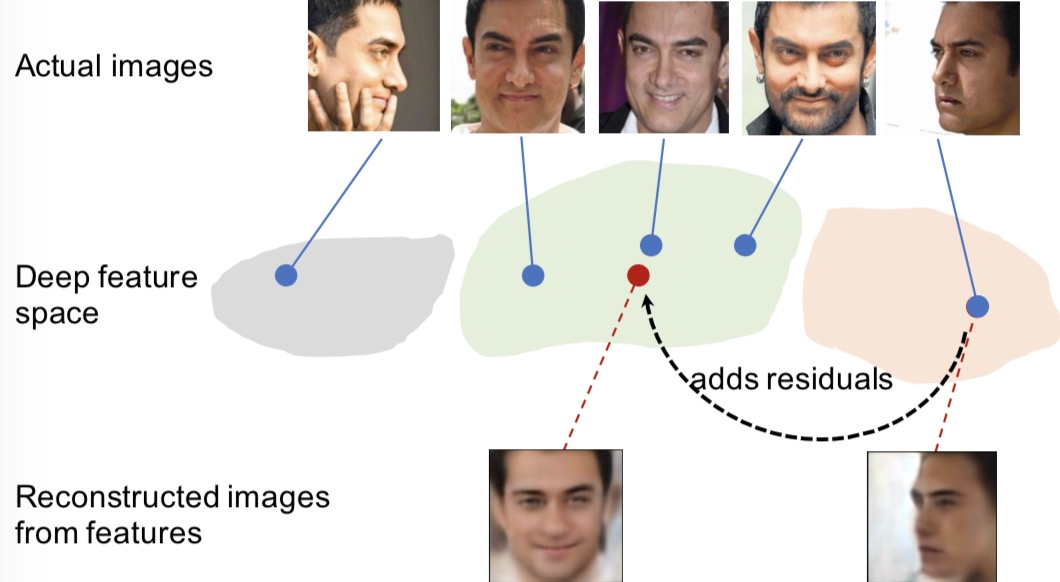

We present a Deep Residual EquivAriant Mapping (DREAM) block that improves face recognition performance on profile faces.
